After building a Kubernetes cluster, I tried to migrate my ZNC installation from a stand-alone VPS to Kubernetes.

I created a PersistentVolume, PersistentVolumeClaim, Deployment and Service, and I now had a ZNC instance running with persistent storage (for configuration, logs and so forth).

The only problem was that my ZNC instance couldn't connect to the internet. That's kind of important for ZNC, which is an IRC bouncer, and literally has only two jobs:

- Accept connections from IRC clients.

- Open connections to IRC servers.

After some quick debugging with `kubectl exec -it`, I discovered that the Pod/container did actually have access to the internet, because I could ping public IP addresses. (Thank you `CAP_NET_RAW`.)

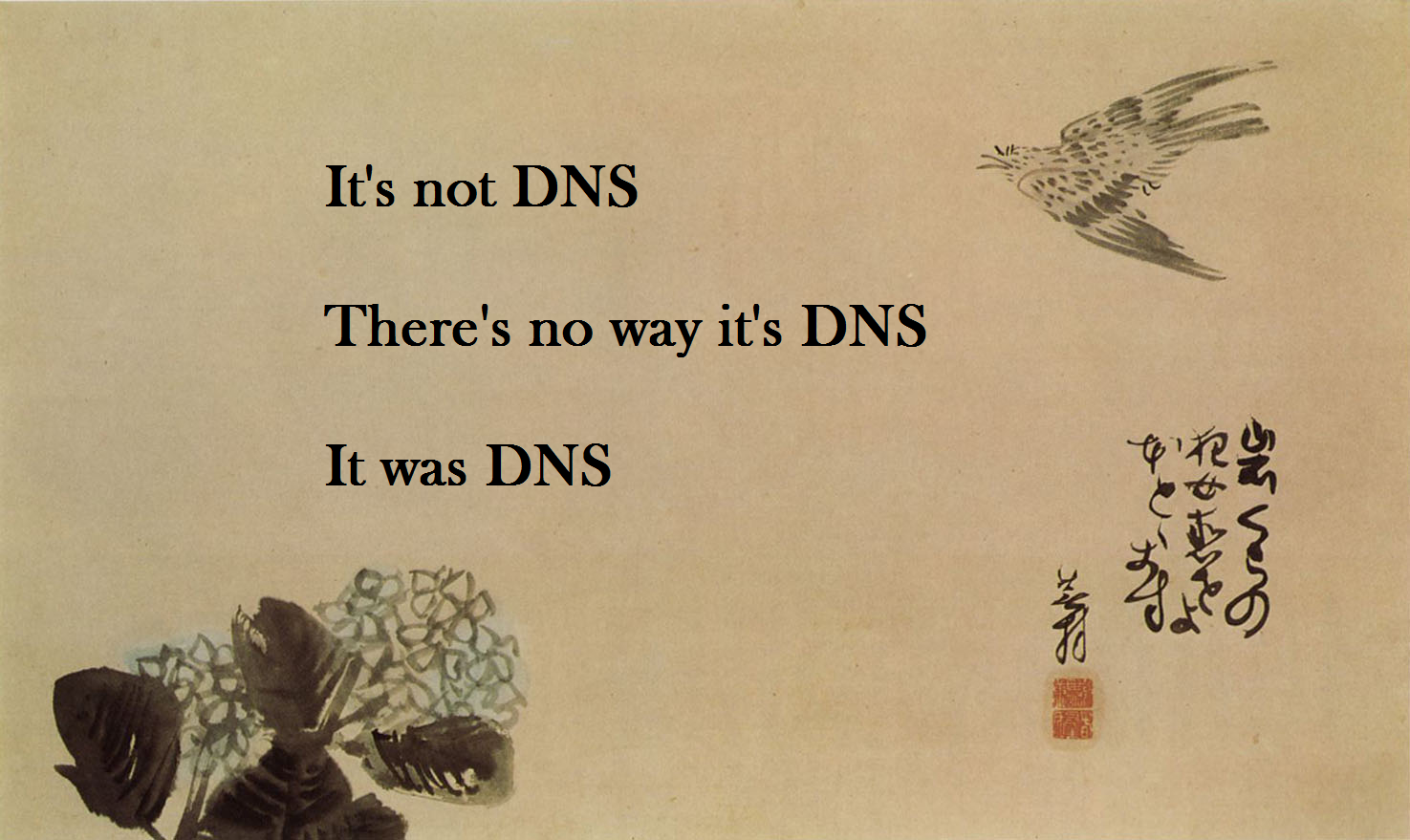

The problem was in DNS.

(Original source unknown, sourced from /r/sysadmin.)
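That split, where raw connectivity works but name resolution does not, can also be checked without `ping`. The following is a minimal sketch, not what I actually ran: the helper names (`can_connect`, `can_resolve`, `diagnose`) are made up for illustration, and the target IP, port and hostname are assumptions you would swap for your own.

```python
import socket


def can_connect(ip="8.8.8.8", port=53, timeout=3.0):
    """Return True if a TCP connection to a literal IP succeeds (no DNS involved)."""
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False


def can_resolve(hostname="example.com", resolver=socket.getaddrinfo):
    """Return True if the hostname resolves; resolver is injectable for testing."""
    try:
        resolver(hostname, None)
        return True
    except socket.gaierror:
        return False


def diagnose(connect_ok, resolve_ok):
    """Turn the two checks into a one-line verdict."""
    if connect_ok and resolve_ok:
        return "network up, DNS working"
    if connect_ok:
        return "network up, DNS broken"
    return "network down"
```

Calling `diagnose(can_connect(), can_resolve())` inside the pod would be expected to report a working network with broken DNS in a situation like mine.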

After a couple hours of further debugging, I discovered the following:

1. All of my Kubernetes servers/VMs are running Ubuntu Server 17.10. Ubuntu uses systemd, and whilst I don't know much about systemd, I do know that it is heavily derided for being the exact opposite of the do-one-thing-and-do-it-well UNIX tooling philosophy.

   In this case, systemd has its own DNS resolver called `systemd-resolved`. This replaces the traditional resolver, and `/etc/resolv.conf` redirects applications to use this instead. `resolv.conf` looks like this (below). If you do run `systemd-resolve --status`, it lists a whole bunch of stuff you probably don't care about, including what your real upstream DNS servers are.

       # This file is managed by man:systemd-resolved(8). Do not edit.
       #
       # 127.0.0.53 is the systemd-resolved stub resolver.
       # run "systemd-resolve --status" to see details about the actual nameservers.
       nameserver 127.0.0.53

2. Kubernetes has its own DNS resolver too, running inside a pod named `kube-dns`. Inside a pod, `resolv.conf` looks like this:

       nameserver 10.96.0.10
       search default.svc.cluster.local svc.cluster.local cluster.local
       options ndots:5

3. Kubernetes configures its own DNS server to use the host's `/etc/resolv.conf` as an upstream for anything that it cannot resolve.

4. Since `127.0.0.53` is part of the `127.0.0.0/8` range, it is a loopback address, just like `127.0.0.1`, i.e., `localhost`.

5. Thanks to the default kernel namespaces provided by Docker/Kubernetes, `localhost` inside of a Pod/container is not the same as `localhost` on the host machine.
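The loopback observation above can be checked mechanically. Here is a minimal sketch using Python's standard `ipaddress` module; the function names are my own, and the two sample strings are abbreviated from the `resolv.conf` files shown above.

```python
import ipaddress


def nameservers(resolv_conf_text):
    """Return the nameserver addresses listed in resolv.conf-style text."""
    servers = []
    for line in resolv_conf_text.splitlines():
        fields = line.split()
        # Comment lines start with "#", so their first field is never "nameserver".
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def loopback_nameservers(resolv_conf_text):
    """Return only the nameservers that fall in a loopback range."""
    return [
        server
        for server in nameservers(resolv_conf_text)
        if ipaddress.ip_address(server).is_loopback
    ]


HOST_RESOLV_CONF = """\
# 127.0.0.53 is the systemd-resolved stub resolver.
nameserver 127.0.0.53
"""

POD_RESOLV_CONF = """\
nameserver 10.96.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5
"""

print(loopback_nameservers(HOST_RESOLV_CONF))  # ['127.0.0.53']
print(loopback_nameservers(POD_RESOLV_CONF))   # []
```

Any address the first call returns is one that only makes sense on the machine (or in the namespace) where the file lives, which is exactly what makes it a poor upstream for something running in a pod.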

Of all of this, (5) is probably the most critical. If we zoom out to have a look at what happens when we make a DNS request:

- The pod looks at `resolv.conf`.

- The pod makes a DNS request to `10.96.0.10`, which is the `kube-dns` pod.

- `kube-dns` can't handle the request, so it sends it to an upstream DNS server, `127.0.0.53`.

- Since `kube-dns` is running inside a kernel networking namespace, it doesn't have access to the real `127.0.0.53:53` address on the host. Its DNS requests stay within the pod, and are never answered.[1]
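The chain above can be mimicked with a toy model. This is only a sketch of the reasoning, not how the kernel or Kubernetes implements anything: the namespace names and the `NAMESPACES` table are invented for illustration, and treating `10.96.0.10` as if it lived directly in the `kube-dns` pod is a simplification.

```python
import ipaddress

# Toy model: each network namespace maps "address:port" to whatever listens there.
# The names and layout are illustrative assumptions, not real Kubernetes objects.
NAMESPACES = {
    "host": {"127.0.0.53:53": "systemd-resolved"},
    "kube-dns-pod": {"10.96.0.10:53": "kube-dns"},
    "znc-pod": {},
}


def who_answers(caller_namespace, address, port=53):
    """Return the listener a caller reaches at address:port, or None."""
    key = f"{address}:{port}"
    if ipaddress.ip_address(address).is_loopback:
        # Loopback traffic never leaves the caller's own namespace.
        return NAMESPACES[caller_namespace].get(key)
    # Non-loopback addresses are treated as routable from anywhere.
    for listeners in NAMESPACES.values():
        if key in listeners:
            return listeners[key]
    return None


print(who_answers("znc-pod", "10.96.0.10"))       # kube-dns
print(who_answers("kube-dns-pod", "127.0.0.53"))  # None
print(who_answers("host", "127.0.0.53"))          # systemd-resolved
```

The middle call is the failure: from inside the `kube-dns` namespace, nothing is listening on `127.0.0.53:53`, even though the same address works fine on the host.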

The solution to this is to bypass `systemd-resolved` and get `kube-dns` to talk to a DNS server that it can actually reach.

By default, Kubernetes supplies `kube-dns` with upstream DNS server addresses from the host's `/etc/resolv.conf`, but this is configurable. We can change these addresses by providing a ConfigMap for the `kube-dns` pod.

Here's a simple one that just uses the primary Google DNS resolver:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-dns
      namespace: kube-system
    data:
      upstreamNameservers: |
        ["8.8.8.8"]

If you install this with `kubectl apply -f myconfig.yaml`[2], then `kube-dns` will be able to actually forward recursive DNS requests upstream. Your pods should now be able to happily resolve names to make connections to the internet.
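If you want more than one upstream, the same ConfigMap can be generated rather than hand-written. The sketch below is one way to do it, assuming you are happy to feed `kubectl` a JSON manifest instead of YAML; `build_kube_dns_configmap` is a hypothetical helper, and the second address (`8.8.4.4`, Google's secondary resolver) is just an example. It refuses loopback addresses, since those would reproduce the original problem.

```python
import ipaddress
import json


def build_kube_dns_configmap(upstreams):
    """Build a kube-dns ConfigMap manifest (as a dict) for the given upstream IPs."""
    for address in upstreams:
        # ip_address() raises ValueError on anything that is not a valid IP.
        if ipaddress.ip_address(address).is_loopback:
            raise ValueError(f"{address} is a loopback address; kube-dns cannot reach it")
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "kube-dns", "namespace": "kube-system"},
        # kube-dns expects the list itself as a JSON-encoded string.
        "data": {"upstreamNameservers": json.dumps(upstreams)},
    }


manifest = build_kube_dns_configmap(["8.8.8.8", "8.8.4.4"])
print(json.dumps(manifest, indent=2))
```

Redirect the output to a file and apply it with `kubectl apply -f` in the same way as the YAML version.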