A few years ago, as I have hinted to previously, I worked on a project to automate the creation of our CI/CD agent virtual machines at work: starting with a blank slate each time, installing Windows, then installing and configuring all the third-party and first-party software needed to make the thing tick.

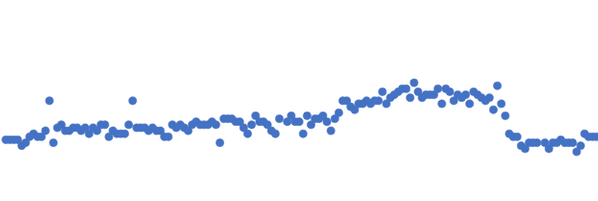

At the time, I got it all working with Windows Server 2016, but after we deployed it to production we noticed that the compilation times for our flagship software were approximately 3x what they were previously. This was quite problematic as it gummed up our CI/CD queues and everything came grinding to a halt. If you plot our average build times over time on a graph, you get a very noticeable hump during the rollout and then a drop as we rolled back:

The previous set of VMs were all running Windows Server 2012 R2, so we rolled back to that operating system while we tried to figure out what had gone wrong. We tried a bunch of things, including comparing builds of other large projects such as Roslyn, but we couldn't reproduce it on other codebases and never did figure it out... until now.

With Windows Server 2012 R2 hitting end of life quite a while ago, we really needeed to update our base OS. Now that Windows Server 2019 is out, I tried to give it a turn and figure out where this problem lies. In the two years or so since the previous attempt I had also come across new debugging tools that I felt would help me out, so I was confident that I could figure this one out.

First Hurdles

After downloading a Windows Server 2019 ISO and spinning up a new VM with it, I booted up our internal CI agent, and... it panicked. Not quite like a kernel panic, but still a critical startup failure - it couldn't find the system RAM disk.

All our build servers have a RAM disk that lets some file operations never touch an actual file storage device. Its existence predates my involvement with our developer tooling, and possibly my entire employment, but the theory went that by keeping things in RAM, it would be faster than hitting mechanical spinning disks (we didn't have SSDs back then) and since CI/CD is very file-intensive - constantly downloading, compiling, and deleting code - it would also avoid heavy contention and wear and tear on them.

So why couldn't it find the RAM disk? After debugging it for a bit, I figured out that the detection code was looking for a drive that either Windows recognised as a RAM disk, or that was kinda small and passed a quick on-the-fly benchmark. The RAM disk driver presents itself as a "fixed" drive, not a "RAM" drive, but on the old 2012 R2 build servers it clocked in at about 2000 GB/s writes on the benchmark.

The new server was only getting 80 MB/s.

To cut to the chase on this one, the problem was Windows Defender. After adding the neccesary exceptions, we were back at 2000 GB/s, and the build agent started up very happy that it could find its precious little RAM disk.

Analyzing Build Times

Now that the build agent was up and running, it was time to run a build of our flagship software and see how long it took. Unsurpsingly, it was still taking roughly as long as under Windows Server 2016 - about 3x as long as our current production runtimes, and still far too long to be acceptable.

I assumed at this point that since we were seeing it in our codebase, but not in third-party open-source projects that we used for comparison, there must be something strange and custom that we were doing. Suspicion immediately fell on the custom post-build step in each .csproj file that runs localization analysis, but I needed proof before I could start pointing the blame at things.

The first thing I did was produce a custom build of our toolchain that would log each step of the build process and its duration. I ran this twice - once on an existing production server, and once on my new experimental server - and compared the outputs.

Very strangely, the .csproj builds at the start of the build were taking the expected amount of time, but the ones at the very end of the build had blown out to many many times their expected duration. Interesting.

To get to the bottom of this, I produced another custom build of our toolchain. This time whenever we invoke msbuild.exe, I added a command-line flag. /bl, or -binaryLogger in its longer form, is a fairly recent new feature that:

Serializes all build events to a compressed binary file... The binary log is a detailed description of the build process that can later be used to reconstruct text logs and used by other analysis tools. A binary log is usually 10-20x smaller than the most detailed text diagnostic-level log, but it contains more information.

If I take these binary logs and feed them into the wonderful MSBuild Log Viewer, it shows me some really nice details about the internals of the build process, including each individual MSBuild Task, and most critically for my needs, each individual Task's duration.

Doing the same comparative analysis as before - one build on an existing fast server and one on the new strangely-slow one - it pointed the blame very squarely at the ResolveAssemblyReferences task.

This was very surprising to me, because that is not some special custom task that is unique to my company codebase. That is a bog-standard task from Microsoft whose job it is to, essentially, look at all of the assembly names that a project references and find the matching .dll files.

The fact that this is the slow point and that it gets slower and slower further into the build process - where we have already built many DLLs and our projects start to reference more and more assemblies - suggested to me that this was some kind of O(N) (or greater, perhaps O(N^2)) problem relating solely to the filesystem. If this is true, then that would explain why we never saw it in third-party codebases - most open-source codebases, even Roslyn, only produce a handful of DLL files, not the literally hundreds (like 600 or more) that comprise our flagship software.

Scrutinising the RAM disk

Narrowing down the problem to an I/O issue made me immediately suspect the RAM disk. Although we had added Windows Defender exclusions, there was clearly something else at play.

Furthermore, this would explain why we were getting slower builds in CI/CD but not on developer PCs, which had been running the workstaion equivalents of Windows Server 2016/2019 (i.e. Windows 8, 8.1, and 10) for many years without the builds slowing down, as the developer PCs do not have that RAM disk driver installed.

The next step was logically to start a spreadsheet and run some more benchmarks. I put together a test matrix for how long it took to build our server on each operating system (Windows Server 2012 R2 vs 2019), whether the RAM disk was used or not (yes vs no), and what storage fabric the VM's disk was hosted on (SAN vs SSD vs HDD).

Somewhat surprisingly, in every single test result, our flagship software built faster without the RAM disk than with it. Even on the existing servers running Windows Server 2012 R2, even on a spinning mechanical hard disk, compilation times decreased by upwards of approximately 30%(!!) simply by not using the RAM disk.

By removing the RAM disks from the fleet, we could speed up our builds immediately, as well as unlock the ability to upgrade our operating system without negatively impacting performance.

Conclusion

I think the moral of the story is pretty clear here.

- When introducing changes that you think will bring performance improvements, run benchmarks to prove it.

- When you suspect a component is causing performance problems, run benchmarks and analysis to prove it. Had I gone digging into our custom post-build task instead of the actual task that was taking longer, I would probably never have solved this.

- The MSBuild Binary Log is freaking awesome and if you ever need to understand what is happening under the hood of building your .NET project, try it. My hat's off to everyone involved in the development of it.

I still don't know why the RAM disk was slower than a virtual disk backed by a HDD - I suspect a lack of caching in the filesystem driver, or perhaps even though it is really fast to access content it was really slow at handling file metadata - and I don't think I ever really will know.